Towards Data Science - Author Spotlight with Eugene Yan

I was recently interviewed by Towards Data Science as part of their Author Spotlight series. They had fun questions, some of which I’ve not written about before, so I thought I would share them here too. Have more questions? Please post them on the AMA!

• • •

You took a rather interesting career path into data science. From breaking into the field with a Psychology degree, to landing a “rocketship” role at Lazada, and now as an Applied Scientist at Amazon. Could you share a bit about how you found your way into the field?

I don’t recall starting with data science as a career goal. Back then, I was mostly interested in how people perceive, decide, and behave. That’s why I got into Psychology in the first place. My initial goal after graduation was to be an industrial and organizational psychologist, to help make people more productive and happy at work. Unfortunately, there weren’t any roles for fresh graduates (perhaps I wasn’t good enough, haha).

I eventually joined the Ministry of Trade and Industry where I focused on the investment side of things, trying to make it easier and safer for companies to invest overseas. It mostly involved negotiating investment and free trade agreements, analyzing legal text, studying arbitration cases. The travel was exciting but after a while, I missed working with data.

In my free time, I took online courses on statistics, SQL, and R. I also interviewed for entry-level data positions and eventually landed a job at IBM as a data analyst. In my first year, I mostly worked on analytics projects such as building supply chain dashboards and scraping and analyzing Twitter data for brands. After a year, I had the opportunity to join the workforce analytics team where I worked on job demand forecasting and building an internal job recommendation engine. The work was similar to my initial goal of using data to help people at work; it just took me longer to get there.

Nonetheless, another opportunity came to join an e-commerce startup (Lazada). I was curious what working in a startup would be like, after being in government and IBM, and took the leap. I’ve written about how that happened and won’t rehash it here. (Lazada eventually got acquired by Alibaba, and after another stint in a health-tech startup, I joined Amazon.)

What is your favorite project or a project you’re particularly proud of?

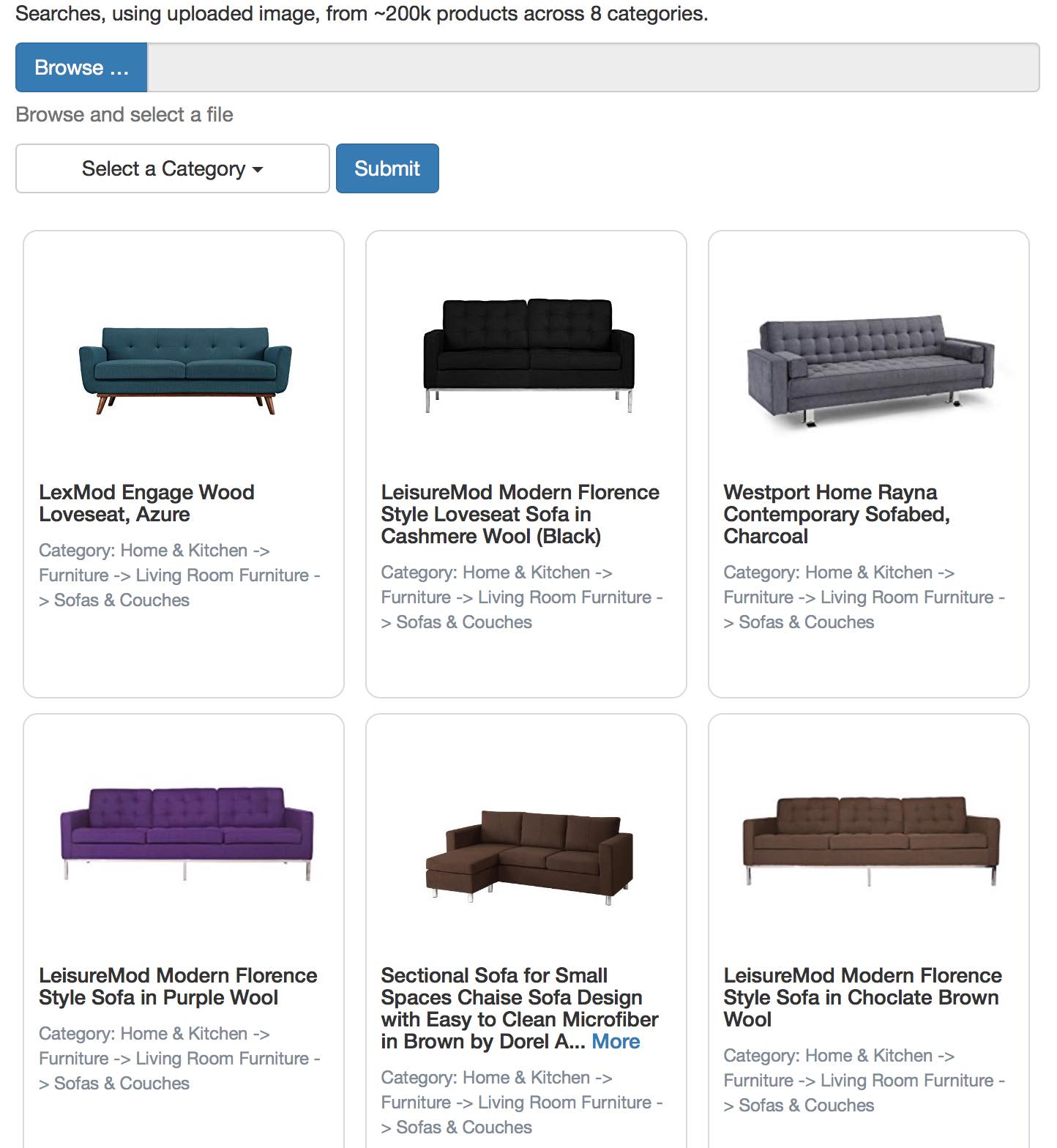

My first self-learning project will always be my favorite. For that project, I used data scraped from Amazon to build an ML system to classify products—into thousands of categories—based on titles. The machine learning approach was Bayes-ic but it worked well (top-3 accuracy of 0.965). It eventually evolved to include image classification and image search. (Parts of it also got used at Lazada to help sellers categorize products.)
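To make the idea concrete, here is a minimal sketch of a naive Bayes title classifier scored by top-3 accuracy. It is not the original project's code: the toy titles, the category names, and the helper functions (`train_naive_bayes`, `top_k_categories`, `top_k_accuracy`) are all made up for illustration, and a real system would train on far more data with a proper tokenizer.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(titles, labels):
    """Count words per category for a multinomial naive Bayes model."""
    word_counts = defaultdict(Counter)  # category -> word -> count
    doc_counts = Counter(labels)        # category -> number of titles
    vocab = set()
    for title, label in zip(titles, labels):
        words = title.lower().split()
        word_counts[label].update(words)
        vocab.update(words)
    return word_counts, doc_counts, vocab


def top_k_categories(title, model, k=3):
    """Return the k most likely categories for a title, best first."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label, n_docs in doc_counts.items():
        total_words = sum(word_counts[label].values())
        score = math.log(n_docs / total_docs)  # log prior
        for word in title.lower().split():
            if word not in vocab:
                continue  # skip words never seen during training
            # Laplace (add-one) smoothing avoids zero probabilities
            score += math.log(
                (word_counts[label][word] + 1) / (total_words + len(vocab))
            )
        scores[label] = score
    return sorted(scores, key=scores.get, reverse=True)[:k]


def top_k_accuracy(titles, labels, model, k=3):
    """Share of titles whose true category is among the top k predictions."""
    hits = sum(
        label in top_k_categories(title, model, k)
        for title, label in zip(titles, labels)
    )
    return hits / len(titles)


# Hypothetical toy data, only to show the shape of the inputs.
train_titles = [
    "leather sofa three seater", "fabric sofa with cushions",
    "wireless bluetooth headphones", "noise cancelling headphones",
    "stainless steel water bottle", "insulated water bottle",
    "running shoes men", "trail running shoes",
]
train_labels = ["sofa", "sofa", "headphones", "headphones",
                "bottle", "bottle", "shoes", "shoes"]
model = train_naive_bayes(train_titles, train_labels)

test_titles = ["leather sofa", "bluetooth headphones", "steel bottle", "running shoes"]
test_labels = ["sofa", "headphones", "bottle", "shoes"]
print(top_k_categories("leather sofa", model, k=3))
print(top_k_accuracy(test_titles, test_labels, model, k=3))
```

Top-3 accuracy is a forgiving metric by design: with thousands of categories, showing a seller three plausible suggestions is often more useful than forcing a single guess.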

I’m proud of that effort because when I started, I knew next to nothing about machine learning and programming and had to stretch (a lot) to get it working. To train and deploy the model, I had to learn how to work with AWS EC2, S3, and Route53. To serve my model, I had to learn how to wrap it in a Flask app, build a basic frontend with Bootstrap and HTML, and muck around with Nginx. For image classification and search, I had to learn how to apply deep learning for computer vision, as well as how to use pre-trained models (e.g., VGG, ResNet) for transfer learning.

I spent many weekends struggling with Python, Theano, HTML, AWS, etc. The tremendous effort I poured into the project probably explains why I’m so proud of it. What I learned from it turbocharged my learning, and the gains also spilled into my work productivity.

Finding similar-looking sofas with "dimples".

You have written many articles across an eclectic range of topics, from what a data science portfolio shows, to maintaining models in production, and a series of guides to ML systems in industry. What is your process for writing and creating so prolifically?

I have a list of potential topics in my notes somewhere. Whenever inspiration for an article strikes, I add to it—it’s really just a list of titles. For example, a friend recently lamented about the difficulty of educating stakeholders about what machine learning can and cannot do—I thought it would be an interesting topic and added it to the list. I also get questions via my topic poll and AMA and chats with friends.

Just jotting the title on that list makes me pay attention to it. And when I come across relevant material or references, I add them under those titles. Titles can stay on this list for months or years. Probably half of them won’t evolve into articles, because I might find that there’s nothing novel I can contribute to the discussion.

Once a week, I pick the title I’m most interested in exploring. Early in the week, I write a basic outline of the piece, mostly using the Why, What, How structure (the other main form being listicles). The aim is to pour whatever comes to mind into that draft and not worry about language, content, sources, etc. This takes about one to two hours.

The next day, I scan the outline and try to rewrite it from memory. This forces me to reorganize the ideas. Sometimes, details or entire sections get left out—this suggests that they weren’t essential to the thesis. Nonetheless, I compare my previous and new outlines to make sure the new outline’s better, and I didn’t exclude anything important. I iterate on the outline once or twice—on separate days—taking one to two hours each. But if my thinking on that topic is especially muddy, I might need three or four iterations.

Regardless of what state the outline is in, I start converting my outline into prose on Saturday morning. By this time, the outline’s fairly detailed and it’s a matter of rewriting bullet points into sentences and paragraphs. This takes anywhere from five to fifteen hours. Once that’s done, I edit it once for grammar and spelling, and another time for readability. I always ship by Sunday night.

What are the things you’ve enjoyed most about writing such articles?

I enjoy how writing forces me to research and learn and summarize my thinking, especially the more technical pieces like teardowns and surveys. Writing’s been an invaluable learning technique. When I try to write, gaps in my knowledge appear. This leads to more research and learning before I can complete the piece.

I also enjoy how writing helps me reflect, collect, and structure my thoughts. This mostly applies to pieces where I write from experience, such as the benefits of reading papers and how to maintain ML systems in production. When the need arises for me to preach about these topics again, I’d have thought about it deeply and even have a URL to share.

What I enjoy most about writing is how scalable it is. Writing is O(1). It takes the same effort to write for one person or 10,000. When I’ve been asked the same question multiple times, I try to write about it (e.g., how to write ML design docs, the difference between various data/ML roles). As a bonus, my writing has made me new friends such as other ML practitioners, VCs, startup founders, etc., and has led to several interesting opportunities.

+1 for a blog post about your experience on this.

— Dr. L λR Y-54 (@visenger) December 10, 2020

The tweet above led to a write-up on real-time recommender systems.

What kind of writing in DS/ML would you like to see more of?

(From TDS: The context of this question is the general sense that most tech articles or tutorials published online are terrible. To improve that, one of my goals as an editor is to seek out & publish “ghost knowledge” from experienced practitioners versus the marginal blog post about a tutorial or student portfolio project.)

I’m a practical person and enjoy practical content. I like content where I can learn from others’ successes or mistakes and apply them at work. That’s why I curated the applied-ml repository. Reading how other companies approach and solve ML problems has been helpful when I’m designing my own ML systems. Nonetheless, I wish people would share more about what didn’t work. Maybe not every failed experiment, but general lessons about how not to approach a problem would be a great timesaver.

I also enjoy papers where authors include ablation studies. It’s amazing (and slightly sad) how much we can do with deep learning yet know so little about how it learns.

In the same vein, I enjoy content about the nuts and bolts of ML systems where authors share how machine learning, engineering, and product come together to build something that helps people. We need more of such case study-type content to help the community learn how to apply ML effectively.

You have a pretty comprehensive writing philosophy. What is your advice to readers looking to improve their writing and also stand out online as more and more engineers get into writing?

Start. Just start.

I usually get two responses to that: “What if my writing is bad?” or “But I haven’t found my niche yet!”

Don’t worry about your writing being bad. The truth is, approximately zero people are going to read your first dozen pieces (well, unless you write on a big publication like Towards Data Science 😉). So dance like nobody’s watching, and write like nobody’s reading. At the beginning, you’re writing to practice.

Similarly, you won’t find your niche by just thinking about it. You find it by writing a lot, putting it out there, and seeing what resonates. And maybe after 20 pieces or so, you look back and see themes emerge from your body of work. You can only connect the dots looking backwards—the best way to do this is to have a lot of dots by shipping consistently.

You can’t connect the dots looking forward; you can only connect them looking backwards. — Steve Jobs

That said, there are a few lessons I’ve learned about writing to make it easier and more enjoyable. I’ve written about it in detail previously and will share the key points here:

- Writing is 80% preparation, 20% writing.

- Writing is hard for everyone.

- You don’t find your niche; your niche finds you.

- Your writing is nothing if not useful.

- Your voice is not how you write; your voice is you.

I think it’s okay if your work doesn’t stand out online. Writing is both a multiplayer and single-player game. It’s multiplayer in the sense that other people can engage with and share your writing, give you feedback, and befriend you. It’s single-player because when you write, you’re learning, thinking deeply, and clarifying your thoughts. Regardless of multiplayer outcomes, you always win the single-player game.

Many things in life are both single-player & multi-player games.

Writing for example. Getting feedback and making new friends is multi-player; learning and clarifying your thoughts is single-player.

Regardless of multi-player outcomes, you always win the single-player game.

— Eugene Yan (@eugeneyan) May 5, 2021

What are your hopes for the DS/ML community in the next few months/couple of years?

I’d like to see the community thinking more about ML systems—instead of just ML models—and the production side of things. I get the sense that, right now, most people consider training and achieving good validation results as the finish line (à la Kaggle).

However, there’s a lot more that needs to be done for your model to make an impact. You have to set up data pipelines, orchestrate and schedule them, deploy, test, monitor, scale infra for latency and throughput, have policies for data privacy, security, on-call, etc. All this takes time, easily 20-100x the time to train and validate a prototype model.

Here's a datapoint from a Quora answer: "For one project I worked on: research quality prototype: 2 person-months. Production-quality version: 117 person-months. The prototype had some extra features not seen in the production version." https://t.co/tqjlTjDgGf

— Eugene Yan (@eugeneyan) April 29, 2021

"The prototype had extra features not seen in the production version."

And after you’ve shipped your ML model, it’s just the starting line. You have to maintain it sustainably. Imagine shipping an ML app once every quarter. After a year, you’ll have four apps to maintain. How would you do that without too much operational burden?

I also wish more data scientists focused on problem formulation. For example, to identify abuse on social networks, you could frame it as a supervised or unsupervised problem. A supervised approach will need high-quality labels. An unsupervised approach has various flavors, such as outlier detection (e.g., isolation forests) or network analysis (e.g., graph clustering of bad actors).
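As a small illustration of the unsupervised framing, the sketch below flags accounts whose activity is far from typical, without using any labels. It deliberately swaps in a much simpler technique than isolation forests: robust z-scores based on the median absolute deviation (MAD). The feature (messages sent per hour), the sample values, and the 3.5 threshold are assumptions chosen for illustration only.

```python
from statistics import median


def mad_outlier_scores(values):
    """Robust z-scores based on the median absolute deviation (MAD)."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the values are identical; no spread to score against.
        return [0.0 for _ in values]
    # 0.6745 rescales MAD so scores are roughly comparable to z-scores
    # when the data is normally distributed.
    return [0.6745 * (v - med) / mad for v in values]


def flag_outliers(values, threshold=3.5):
    """Return the indices of values whose robust z-score exceeds the threshold."""
    scores = mad_outlier_scores(values)
    return [i for i, score in enumerate(scores) if abs(score) > threshold]


# Hypothetical messages-per-hour for ten accounts.
messages_per_hour = [3, 5, 4, 6, 2, 5, 4, 250, 3, 5]
print(flag_outliers(messages_per_hour))
```

The same data could instead feed a supervised classifier if trustworthy abuse labels existed; the choice of framing determines what data you need to collect and which mistakes the system is likely to make.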

How we frame problems has an outsized impact on outcomes. This also applies to choosing the right positive and negative labels—negative sampling is an obscure art. It’s also useful to think about how your loss function correlates with your offline validation metrics (recall@k, nDCG), and how that correlates with online A/B testing metrics (clicks, conversion) and downstream business metrics (revenue, customer lifetime value).
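For readers unfamiliar with the offline metrics mentioned above, here is a minimal sketch of recall@k and nDCG@k for a single ranked list. The function names and sample items are hypothetical, and the DCG uses the linear-gain form (relevance divided by log2 of rank + 1); some libraries use an exponential gain instead, so numbers may not match other implementations.

```python
import math


def recall_at_k(ranked_items, relevant_items, k):
    """Fraction of the relevant items that appear in the top k of the ranking."""
    relevant = set(relevant_items)
    if not relevant:
        return 0.0
    return len(set(ranked_items[:k]) & relevant) / len(relevant)


def dcg_at_k(gains, k):
    """Discounted cumulative gain: later positions are discounted by log2."""
    return sum(gain / math.log2(rank + 2) for rank, gain in enumerate(gains[:k]))


def ndcg_at_k(ranked_items, relevance, k):
    """nDCG@k, where `relevance` maps item -> graded relevance (missing = 0)."""
    gains = [relevance.get(item, 0) for item in ranked_items]
    ideal_gains = sorted(relevance.values(), reverse=True)
    ideal_dcg = dcg_at_k(ideal_gains, k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical example: the model ranked four items; three items are relevant,
# one of which ("e") was never retrieved.
ranked = ["a", "b", "c", "d"]
relevance = {"a": 1, "c": 1, "e": 1}
print(recall_at_k(ranked, relevance.keys(), k=3))
print(ndcg_at_k(ranked, relevance, k=3))
```

Recall@k only asks whether relevant items made it into the top k, while nDCG also rewards placing them higher. Which of the two tracks clicks or conversion more closely in an A/B test is an empirical question for each product.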

If you found this useful, please cite this write-up as:

Yan, Ziyou. (Jun 2021). Towards Data Science - Author Spotlight with Eugene Yan. eugeneyan.com. https://eugeneyan.com/speaking/tds-author-spotlight/.

or

@article{yan2021towards,
  title   = {Towards Data Science - Author Spotlight with Eugene Yan},
  author  = {Yan, Ziyou},
  journal = {eugeneyan.com},
  year    = {2021},
  month   = {Jun},
  url     = {https://eugeneyan.com/speaking/tds-author-spotlight/}
}
