A Spark of the Anti-AI Butlerian Jihad (on Bluesky)

Recently, a dataset of 1M Bluesky posts unexpectedly sparked backlash from the Bluesky community. The incident revealed strong anti-AI sentiment among Bluesky accounts and left the AI community feeling unwelcome there. This write-up reflects on what happened, offers hypotheses on why it happened, and describes how the data/AI community responded.

• • •

Bluesky, 26th November 2024: A developer shared one-million-bluesky-posts, a dataset of posts they had collected from Bluesky’s public firehose API. It contained post text, metadata, and information about media attachments such as alt text, and was hosted on Hugging Face.

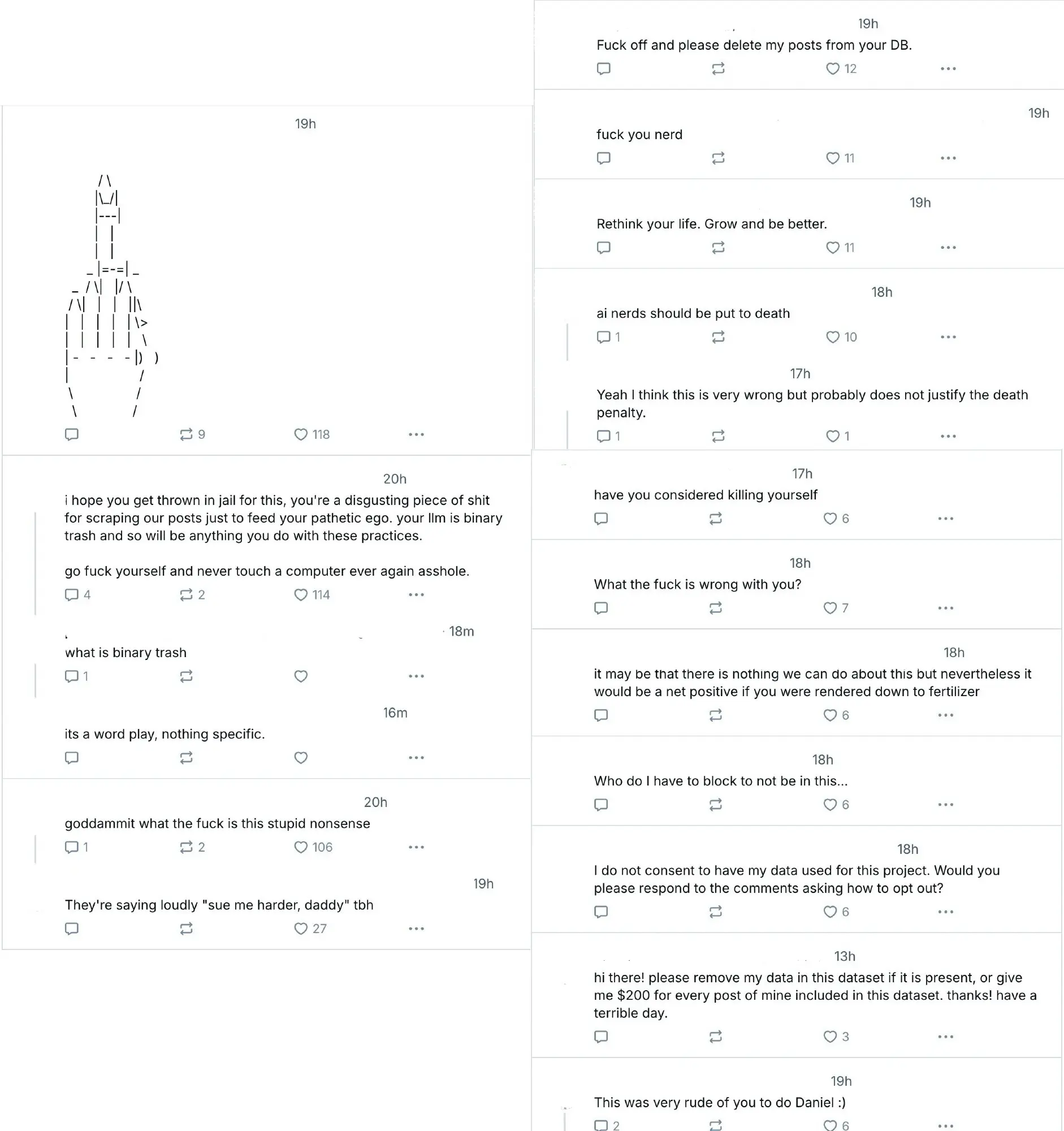

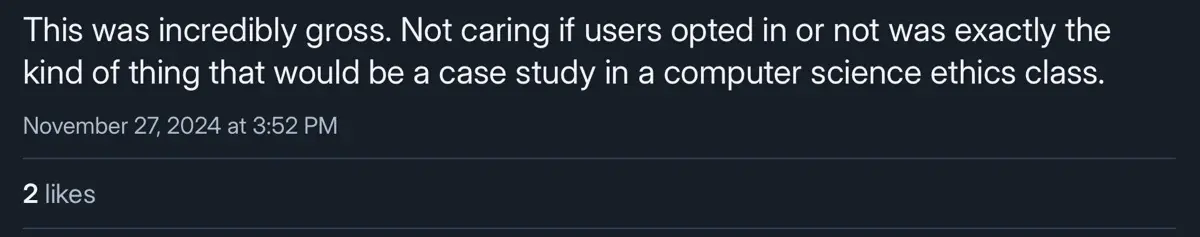

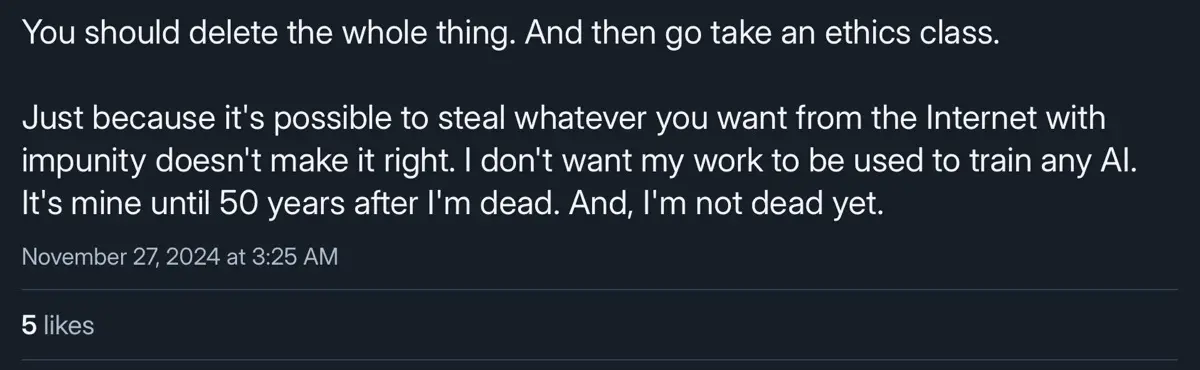

However, many Bluesky accounts reacted with aggression. There were profanity-laced comments. There were accusations of theft, privacy violations, and unethical behavior. There were calls for legal repercussions. And there were death threats.

Negative reactions to the dataset, many of which have since been removed (source)

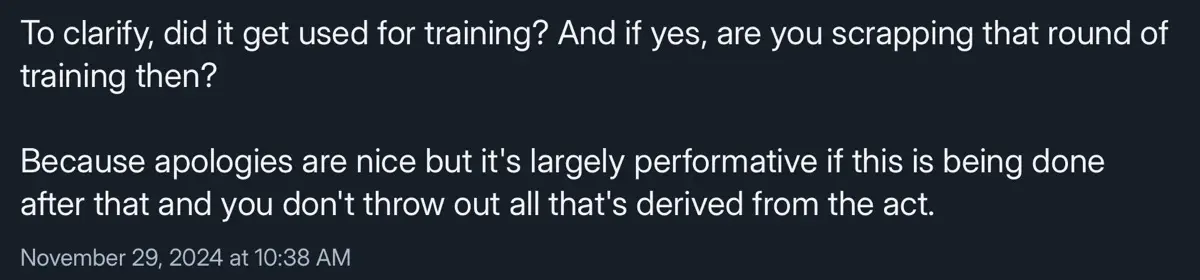

Within 12 hours of sharing the dataset, the developer took it down. They followed up with a post on Bluesky acknowledging that the dataset collection lacked sufficient transparency and consent, and apologized.

IMHO, the intentions were positive and the transparency was commendable. Such datasets enable research on social media patterns and content moderation, as well as the training of language models. The dataset description also explicitly prohibited problematic uses like building bots, generating fake content, or extracting users’ personal information. (To be frank, any well-intentioned data/ML/AI practitioner could have compiled a similar dataset.)

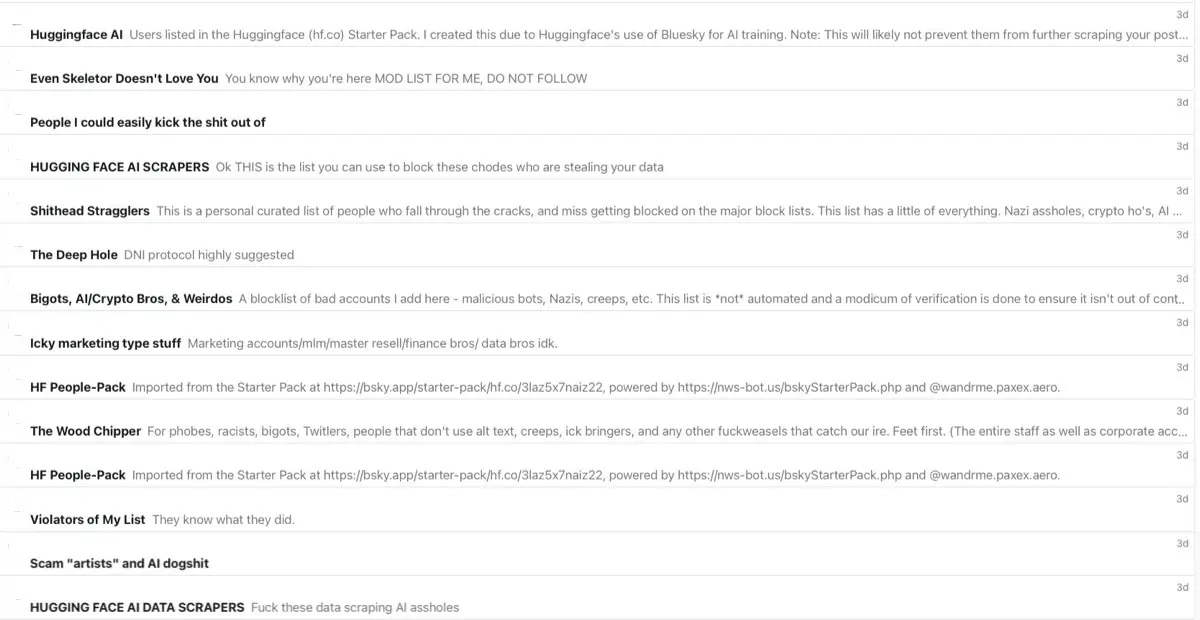

Regardless, the dataset’s release gave us a glimpse of a potential Anti-AI Butlerian Jihad on Bluesky. Because of the developer’s affiliation with Hugging Face, many of their researchers and developers found themselves added to Anti-AI blocklists. On Bluesky, users can create lists to curate feeds or mass-mute/block accounts. It’s likely many accounts discussing ML/AI topics were added to such lists and blocked; I know I was.

Blocklists targeting AI and Hugging Face accounts

In a somewhat cheeky response, new datasets emerged within 24 hours. One developer posted (on Bluesky) about releasing two-million-bluesky-posts; as a result, their account was suspended and then reactivated by Bluesky’s moderation team. Others shared 20-million-bluesky-posts, as well as bluesky-1M-metaposts, a collection of posts discussing the original dataset. Overall, this demonstrated how easy it is to collect bulk data from Bluesky’s API.

Aside: It’s worth noting that an even larger dataset, containing “the complete post history of over 4M users (81% of all registered accounts), totaling 235M posts”, was published six months ago, which makes the 1M dataset look tiny in comparison. The work behind the 235M dataset was supported by the EU and by organizations funded by the EU.

• • •

To better understand the intense backlash, I dived deeper into the critical comments and examined the accounts that made those comments.

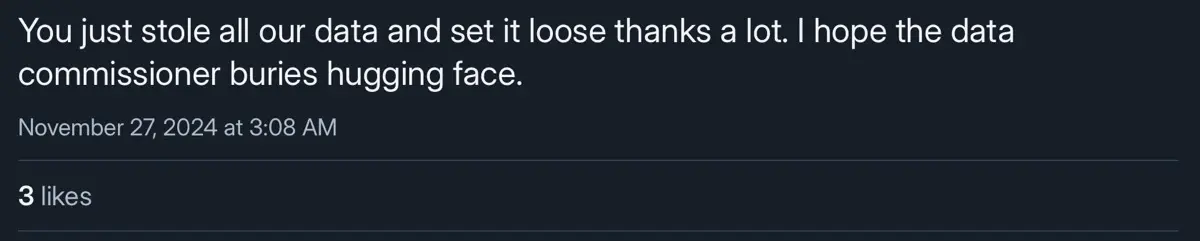

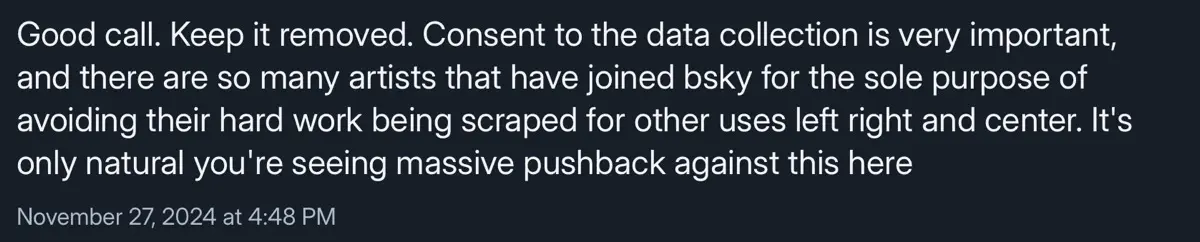

At first glance, the primary issue seemed to be the lack of consent in data collection. Some comments expressed anger at having their data “scraped” or “stolen” without permission. (Note: The accusation is incorrect—there was no scraping or stealing involved. Posts on Bluesky are public and the Bluesky firehose API is open for all to consume.)

Responses objecting to the dataset due to lack of consent

However, as I explored further, two clusters of concerned accounts took shape:

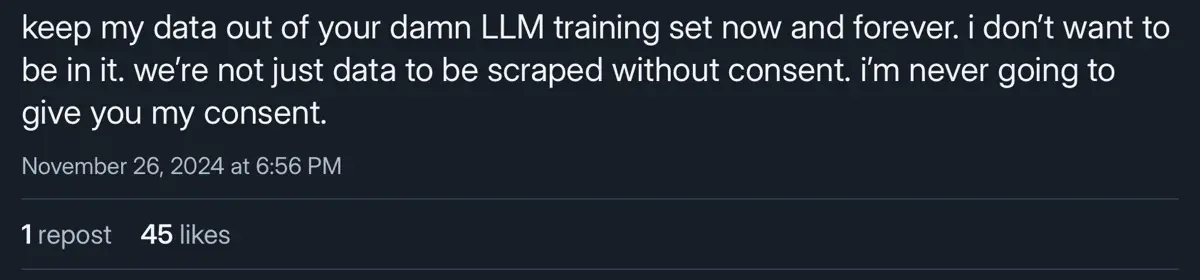

- Accounts that explicitly object to their data being used to train AI models

- Accounts that post NSFW/mature content

The first group’s comments often mention not wanting their data in “LLM training sets” or not wanting their creative work used to train AI without consent. Some demanded the deletion of the dataset and of any AI models trained on it. Notably, one comment pointed out that many artists joined Bluesky specifically to avoid having their work “scraped” for training AI, hinting at the larger tension around AI’s impact on creative professions.

Responses objecting to the dataset as training data for AI models

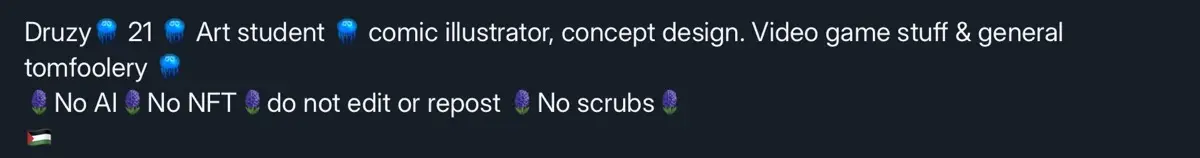

To validate this, I visited a sample of the critics’ accounts and found at least a dozen profiles of writers, artists, musicians, and other creatives, some of whom had added explicit anti-AI declarations to their bios.

Profiles of writers, artists, musicians, and other creatives with explicit anti-AI declarations

I also discovered a second cluster of accounts posting adult-oriented content, typically identified by the 🔞 emoji in their profiles. For this group, the concern may have been having their sensitive posts stored externally without the ability to delete them.

Profiles of accounts posting NSFW/mature content with 🔞 emoji

Note: These two clusters accounted for only 10-20% of the accounts criticizing the dataset. Most accounts had more generic profiles, with posts about everyday life.

In response to the backlash, many in the data, ML, and AI community shared thoughtful perspectives on the complex issues of consent, on using AI for good, and on why the backlash happened.

- The nuances of consent for public social media data (source)

- How consent may not apply to online data (source)

- The inherent public nature of the Bluesky firehose (source)

- The potential benefits of AI, such as combating spam (source)

- The inevitability of Bluesky needing to train its own AI models as it scales (source)

- The relative insignificance of 1-2M posts for training LLMs (source)

- Concerns about the original developer being bullied (source)

- The hypothesis that early accounts were disproportionately anti-AI (source)

Several folks in the AI community also (publicly and privately) shared their concerns about the potential for escalating anti-AI sentiment on Bluesky, with some unfortunately expressing reluctance towards using Bluesky for now.

I really liked the community before this all blew up. Less crap cluttering my feed, just quality posts from HF employees and researchers (the only people who really moved there)

All the examples of Bluesky is pure toxicity from what I have seen. I wanted to check it out in the beginning but feel no desire to do so after seeing the people on there

This sort of mentality has been going on in Tübingen (Germany) for many years. Despite there being some of the brightest AI minds in Europe, locals strongly oppose any development.

Everything that happened on bluesky is making me put a pause on my own participation. I thought it was going to be the next data twitter but now I think I don’t need that anti-ai hate in my life.

anyway if you need someone to talk to about it - just let me know. And I’m not saying this randomly, Me and various EleutherAI folks & gang has been hit on this, multiple times. Also, I know with the direction I’m heading towards, I will be on the target board as well, for more of it. Inevitably.

Despite the unpleasant events, I understand that, for many, the reaction comes from a place of concern and fear. To alleviate this, we need open, empathetic dialog that builds mutual understanding. Even now, several AI researchers and practitioners continue to engage in good-faith education about AI on Bluesky. Nonetheless, it’s unclear whether critics are open to engaging, especially given the widespread blocking of AI-associated accounts.

For now, I plan to hang out where the community is, and since the community’s on both Twitter and Bluesky, that’s where I’ll be. Given recent events, if you do want to discuss AI on Bluesky, it’s dangerous to go alone—take this 📜. You can subscribe to it and either mute or block accounts known to be anti-AI and potentially abusive.

• • •

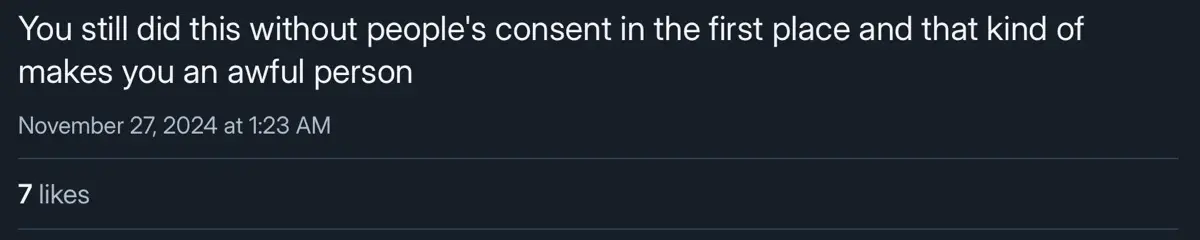

P.S. Unfortunately, I too became a target in this kerfuffle. To reduce the negativity on my feed, I started curating a moderation list of accounts that displayed explicitly anti-AI and abusive behavior. To my surprise, one of the large accounts on the list shared it with their ~20k followers (as a badge of honor?), putting me squarely in the spotlight.

A large account lol-ing about their presence on the moderation list

Soon, I started receiving anti-AI comments, even on unrelated posts like a Thanksgiving message. There were also accounts asking to be put on the list 🤷

Anti-AI comments and requests to be added to the moderation list

As someone whose writing occasionally hits the front page of Hacker News (aka trolling as a service), I’m not much fazed by this negativity. Nonetheless, it has me thinking about turning the list into a self-service web app that lets accounts opt themselves in.

Thanks to Xinyi Yang, Hamel Husain, Vibhu Sapra, Eugene Cheah, Zach Mueller, Alex Volkov, and Nathan Lambert for reading drafts and encouraging me to publish this.

If you found this useful, please cite this write-up as:

Yan, Ziyou. (Dec 2024). A Spark of the Anti-AI Butlerian Jihad (on Bluesky). eugeneyan.com. https://eugeneyan.com/writing/anti/.

or

@article{yan2024anti,
  title = {A Spark of the Anti-AI Butlerian Jihad (on Bluesky)},
  author = {Yan, Ziyou},
  journal = {eugeneyan.com},
  year = {2024},
  month = {Dec},
  url = {https://eugeneyan.com/writing/anti/}
}
